What Is Virtual Production? A Practical Guide for Modern Creatives

So, what exactly is virtual production? In simple terms, it's a way of filmmaking that smashes the physical and digital worlds together in real-time. Forget acting against a vast, empty green screen. Instead, performers are dropped right into a dynamic, interactive digital environment, all displayed on massive LED walls. This means directors can see something very close to the final visual effects while they are shooting, not months down the line in post-production.

A New Era of Filmmaking

Think of it like creating a film inside a live-action video game. It’s a complete departure from the old "we'll fix it in post" mindset, pulling all those big creative decisions to the front of the process. Directors, cinematographers, and actors get immediate visual feedback, allowing them to make critical creative choices on set, with full context. This technology gives creative teams the power to react and adapt in an instant. Want to shift the time of day from high noon to a golden-hour sunset? The virtual environment can be updated in a matter of moments. This level of on-the-fly control was simply impossible before, often demanding expensive reshoots or a mountain of work for the VFX team.

The Core Components

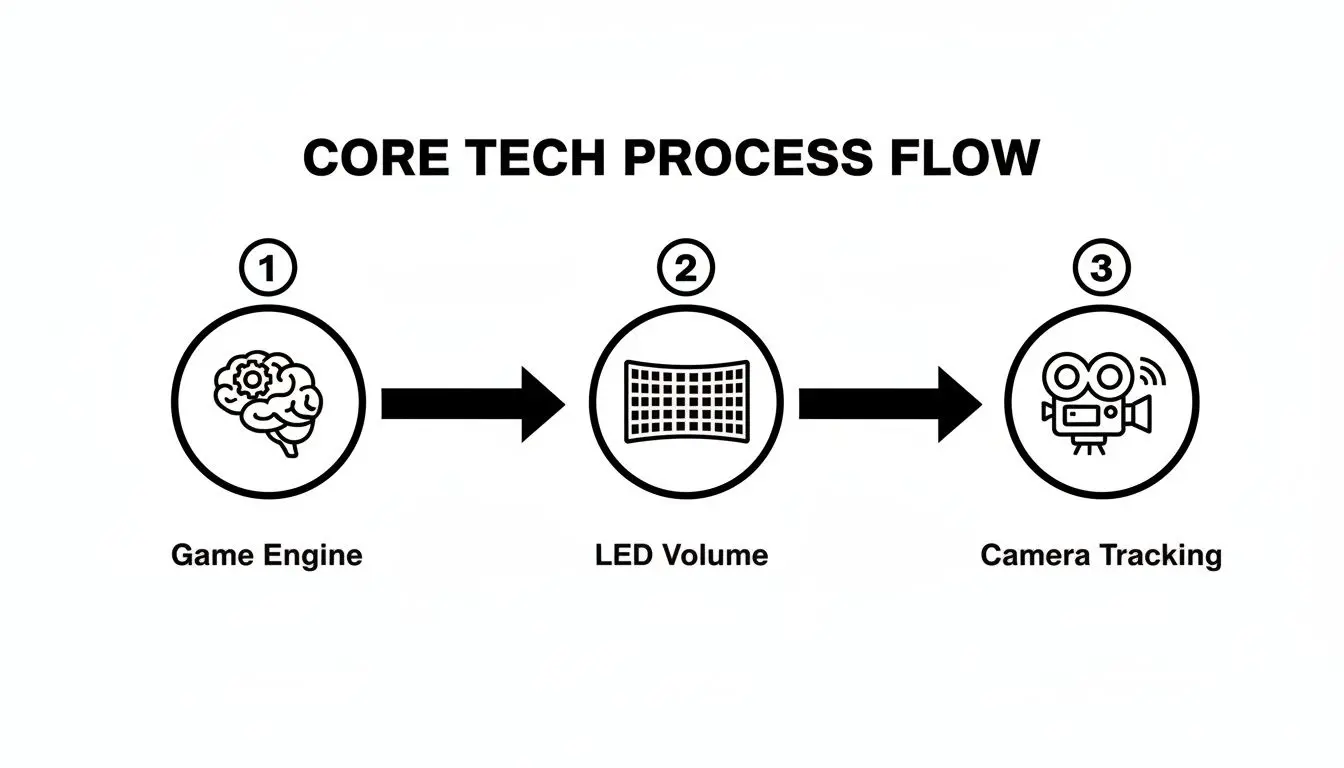

At its heart, this approach is a powerful blend of several key technologies all working in perfect sync. Each piece of the puzzle plays a vital role in creating that seamless bridge between a real-world stage and an imaginary digital one. To get a clearer picture, let's break down the essential tech that makes it all happen. Each component has a specific job, but they all need to communicate flawlessly to create the magic.

Key Components of a Virtual Production Stage

| Component | Function in Virtual Production | Analogy |
|---|---|---|
| LED Volumes | Massive, curved LED screens that display the virtual world. They replace green screens and provide realistic lighting on actors and props. | It’s like the world's most advanced, interactive wallpaper, lighting the room and the scene at the same time. |
| Real-Time Game Engines | Software like Unreal Engine or Unity renders the 3D world instantly, acting as the 'brain' of the operation. | Think of it as the director of the digital world, telling every pixel where to go and what to do in real-time. |
| Camera Tracking | A system of sensors that monitors the camera's exact position, orientation, and lens settings in the physical space. | This is the GPS for your camera, ensuring the digital background always moves perfectly in sync with the real one. |

In essence, these tools come together so directors and performers can interact with digital worlds on set, rather than imagining them against a sea of green. Research from Bournemouth University puts it perfectly, noting that these tools "bring virtual effects to physical sets and make production more flexible and cost-effective", a massive advantage for any modern workflow. You can learn more about the study on innovation in production here.

This shift from post-production to on-set creation provides creative teams with an unmatched degree of control, speed, and flexibility. It dissolves the barrier between filming and visual effects, merging them into a single, unified process.

The result is a more efficient, collaborative, and creatively liberating way to make content. By capturing final-pixel imagery directly in-camera, productions can slash post-production timelines, sidestep costly location shoots, and give artists the freedom to experiment and perfect their vision live on set. This isn't just a technical upgrade; it's a whole new creative language.

The Core Technologies Behind Real-Time Production

Virtual production isn't one single piece of kit. It’s more like a symphony, where a whole host of interconnected systems work together in perfect harmony. Getting to grips with the individual instruments in this orchestra is the key to understanding how these incredible, real-time digital worlds are brought to life on a physical set. At the heart of it all, you have the LED volume, a massive stage built from high-resolution LED panels. But don't think of these as just fancy background screens. They are dynamic, light-emitting environments, and that’s what gives them a huge advantage over a traditional green screen. These LED walls actively light the entire scene. They cast photorealistic reflections and ambient light onto your actors, props, and the set itself. If a character is meant to be standing next to a virtual bonfire, the warm, flickering light will dance across their face in a completely natural way. Pulling that off in a conventional shoot would mean complex lighting rigs and a mountain of post-production work.

The Brains of the Operation: Game Engines

So what's feeding the visuals to these giant screens? That would be a real-time game engine, with Unreal Engine and Unity being the two heavyweights in the ring. Originally built to create interactive video games, these engines are now the computational heart of a virtual production setup. They’re tasked with rendering unbelievably complex 3D worlds, complete with realistic textures, lighting, and physics, dozens of times every second. This is where the ‘real-time’ part of the equation really clicks. The engine chews through massive amounts of data to generate final-quality images instantly. It means directors and cinematographers can look through the camera's viewfinder and see the finished shot, right there and then. This screenshot from Unreal Engine's website shows just how detailed these digital environments can be when rendered for an on-set LED volume. The image gives you a sense of the sheer level of detail and realism possible, creating a world that actors can genuinely step into and react to. A huge part of this is real-time video generation, where the engine is constantly creating and rendering dynamic video on the fly, often responding to live input from the camera or performers. This immediate feedback loop is what gives crews the freedom to experiment and make creative calls right there on set.
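To put "dozens of times every second" into numbers, here is a minimal sketch of the time budget an engine has to finish each frame. The frame rates are illustrative assumptions (24 fps is a common cinema rate), not figures from any particular stage, and `frame_budget_ms` is a hypothetical helper name.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Return the time available to render a single frame, in milliseconds."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


# Illustrative frame rates only; a real stage picks its own target.
for fps in (24, 30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```

At 24 fps the engine has a little under 42 milliseconds to finish each frame, and at 60 fps it has under 17. That is why every asset has to be optimised for real-time use.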

Synchronising the Real and Virtual Worlds

To keep the illusion seamless, the physical camera has to be perfectly synchronised with the virtual camera inside the game engine. This critical task falls to the camera tracking systems. Using a network of sensors, these systems constantly track the precise position and orientation of the real-world camera, along with lens settings such as zoom and focus. This stream of data is fed straight back to the game engine, which then updates the virtual camera's perspective to match it frame for frame.

When the camera operator pans, tilts, or moves through the physical space, the digital background on the LED volume shifts with the correct parallax and perspective, creating a flawless sense of depth.

It's this synchronisation that sells the whole effect. It's what makes it feel like the camera is moving through a real, expansive location, not just a confined studio. If the tracking is off by even a fraction, the illusion shatters instantly.
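The parallax described above comes down to simple geometry. The sketch below is a deliberately simplified illustration, not how any particular engine implements it: it assumes a flat wall on the plane z = 0 (real volumes are usually curved), a tracked camera in front of it, and a virtual object somewhere behind it. The function name `wall_point` and the coordinates are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def wall_point(camera: Vec3, virtual_point: Vec3) -> Tuple[float, float]:
    """Find where on a flat wall (the plane z = 0) a virtual point must be
    drawn so that it lines up correctly from the tracked camera position.

    Assumes the camera is in front of the wall (z > 0) and the virtual
    point is on or behind it (z <= 0). Units are metres.
    """
    cx, cy, cz = camera
    px, py, pz = virtual_point
    if cz <= 0 or pz > 0:
        raise ValueError("camera must have z > 0 and the virtual point z <= 0")
    # Fraction of the way along the camera-to-point line at which it crosses z = 0.
    t = cz / (cz - pz)
    return (cx + t * (px - cx), cy + t * (py - cy))


near_object = (0.0, 0.0, -6.0)   # 6 m behind the wall
far_object = (0.0, 0.0, -96.0)   # 96 m behind the wall

for camera_x in (0.0, 1.0):      # the camera slides 1 m to the right
    camera = (camera_x, 0.0, 4.0)
    near_x, _ = wall_point(camera, near_object)
    far_x, _ = wall_point(camera, far_object)
    print(f"camera x={camera_x:.1f}: near drawn at x={near_x:.2f}, far at x={far_x:.2f}")
```

When the camera slides one metre sideways, the near object's image on the wall moves 0.60 m while the far object's image moves 0.96 m. Distant scenery almost keeps pace with the camera, just as far-off mountains seem to follow you, while anything sitting right at the wall's depth would not move at all. The engine has to redo this calculation for every frame, which is why accurate tracking data matters so much.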

Bringing Digital Characters to Life

The final piece of the puzzle is Motion Capture (MoCap). This is the process of recording an actor's movements and translating that data onto a digital character model. While it’s not needed for every virtual production, it becomes absolutely essential when a scene calls for a live-action actor to interact with a CGI creature or character. MoCap gives you a far more fluid and believable performance than traditional keyframe animation because it captures all the subtle nuances of human movement. There are a few different ways to get it done:

- Optical MoCap: Actors wear suits covered in reflective markers, which are tracked by a ring of high-speed cameras.

- Inertial MoCap: Performers wear suits fitted with small inertial measurement units (IMUs) that track motion without needing any external cameras.

- Markerless MoCap: This is an AI-driven approach where software analyses video of an actor to extract motion data, no special suit required.

By piping MoCap data directly into the real-time engine, directors can see the digital character performing right alongside the human actors, live on set. This gives immediate context for framing, interaction, and performance, completely changing a process that used to be stuck in a slow, disconnected post-production pipeline. For a deeper dive into how all these elements come together on a practical level, our producer’s guide to real-time VFX offers more insight into managing these complex workflows.

How the Virtual Production Workflow Changes Everything

Making the switch to virtual production isn't just a simple tech upgrade; it fundamentally reshuffles the entire creative deck. The traditional model gets flipped on its head, pulling the lion's share of creative work from the end of the line, post-production, right to the very beginning. This front-loads the process, giving creative teams a level of control that was once unimaginable. It all kicks off in what is now the most crucial phase: Pre-production. This is where the Virtual Art Department (VAD) steps into the spotlight. Long before a single camera rolls, the VAD is busy building the entire digital world, piece by piece. Every virtual tree, building, and texture is meticulously crafted and optimised to run smoothly in the real-time engine. This means that by the time the crew actually arrives on set, the "location" is already built, lit, and ready for action.

The Power of Previsualization

Once the digital world is built, the next step is Previsualization, or ‘previz’ for short. This goes way beyond simple storyboarding. In a virtual production workflow, previz lets the director and cinematographer explore the digital set with virtual cameras. They can literally walk through the environment, test out different camera angles, block complex scenes, and play with lighting. Think of it as a digital location scout and a full-scale rehearsal all rolled into one. This stage offers incredible accuracy, allowing the team to iron out creative kinks and lock down the project's visual language before a single frame is officially captured. That alone massively cuts down on guesswork and expensive changes on set. The whole system relies on the game engine acting as the brain, sending the visuals to the LED volume, while the camera tracking system keeps everything perfectly synchronised.

This setup highlights just how tightly integrated everything needs to be. Each component has to communicate flawlessly to maintain that seamless, real-time illusion.

A New On-Set Experience

When it's time for the Production phase, the feel is completely different from a traditional shoot. Because the final digital background is being displayed live on the LED volume, creative decisions can happen on the fly. A director can ask the VAD team to move a virtual mountain or change the time of day from dusk to noon with just a few clicks. Actors are no longer performing against a vast, empty green screen. They can see their surroundings, react to the environment, and deliver performances that feel much more grounded and believable.

The workflow turns the set into a live, iterative creative space. Tweaks to lighting, set dressing, and even the weather can be made instantly, giving the team a degree of control and immediacy that traditional methods just can't offer.

What’s more, the light from the LED screens is incredibly realistic, wrapping around actors and physical props in a natural way. This creates a seamless blend right there in the camera, a technique known as in-camera VFX (ICVFX).

Streamlining Post-Production

The final stage, Post-production, is where the biggest savings in time and money really become clear. In a conventional pipeline, this is where a massive team of VFX artists would spend months compositing green screen footage, building digital sets from scratch, and painstakingly matching the lighting. With virtual production, a huge chunk of that work is already finished. Since the final visual effects are captured in-camera, the post-production phase is much shorter and more focused. Instead of heavy-duty VFX creation, the work often boils down to clean-up, final colour grading, and editing. This efficiency is one of the most compelling reasons to adopt this new way of working. For producers weighing up the right software for this streamlined pipeline, our guide on Unreal vs Unity for real-time animation offers some helpful comparisons.

The Real Benefits and Honest Trade-Offs

Jumping into virtual production is a major strategic shift, not just a tech upgrade. It offers some truly game-changing advantages, but it also comes with its own set of challenges and completely flips the script on how you budget and plan. Getting your head around this balance is the key to figuring out if it’s the right move for your project. The upsides are seriously compelling. The biggest win has to be the unprecedented level of creative control you get right there on set. Directors and cinematographers can make massive decisions and see the results instantly, playing around with lighting, camera angles, or even the time of day with a few clicks. That immediacy just wipes out all the guesswork you get with traditional green screen. Actors are no longer staring into a green void; they’re reacting to a tangible, visible world, which naturally leads to more grounded and believable performances.

Financial and Logistical Wins

Beyond the creative freedom, virtual production brings some hefty logistical and financial perks to the table. By swapping out costly, unpredictable location shoots for a controlled studio environment, you introduce a whole new level of predictability to your budget.

- Slash Travel & Logistics: The need to fly a huge crew and ship expensive gear to multiple locations is massively reduced. That means huge savings on flights, hotels, and freight.

- Weather? What Weather?: Productions are no longer held hostage by the elements. You can hold that perfect "golden hour" light for a 12-hour shooting day, no matter what’s brewing outside.

- A Radically Shorter Post-Production: Because most of the visual effects are captured right in the camera (what we call ICVFX), the post-production phase gets way shorter and less intense. This means quicker turnarounds for the entire project.

By capturing final-pixel imagery on set, virtual production moves the bulk of VFX work from post-production into a real-time, on-set process. This shift not only accelerates timelines but also de-risks the back end of the project.

This efficiency helps your bottom line and the planet. Virtual production can shrink the costs and risks tied to VFX, which often gobble up 10 to 20% of a production's budget. On the green front, research shows that the remote workflows common in virtual production can be up to '130 times more effective' at cutting a project's carbon footprint compared to traditional filming. You can dig deeper into these findings in The Studio Map's UK report.

Understanding the Trade-Offs

But let’s be real, this new way of working isn't a silver bullet. The payoff of a slicker production and post schedule comes at a cost: a massively front-loaded pre-production phase. This is where the whole process gets turned on its head. Long before the cameras even think about rolling, the virtual art department (VAD) has to meticulously build, test, and optimise every single digital asset. It’s a huge upfront investment in both time and specialist talent. On top of that, the initial barrier to entry can be steep. The tech itself, from the high-res LED panels to the rock-solid camera tracking systems, requires a serious capital investment. For most, teaming up with an established studio is a much smarter move than trying to build a stage from scratch. And finally, you've got the people problem. The industry is currently facing a major skills gap. There's a huge demand for a new kind of creative technologist: artists and technicians who are fluent in both the language of filmmaking and the complex world of real-time game engines like Unreal Engine or Unity. Finding and keeping this talent is one of the biggest hurdles right now. You need a crew that can problem-solve live on set, blending artistic vision with technical know-how under pressure. That mix of skills is rare, and everyone is after it.

Where Is Virtual Production Making an Impact?

While it was the big science fiction blockbusters that first showed us what was possible, virtual production is now solving real-world creative and logistical problems across a surprisingly wide range of industries. It’s no longer just a tool for Hollywood. It's become a powerhouse for brands, educators, and creators who need to build immersive worlds with more control and efficiency than ever before. Put simply, this technology is finding a home wherever great storytelling and world-building are essential. The ability to merge physical and digital elements in real-time unlocks entirely new ways to engage an audience, delivering a level of visual quality and on-set agility that used to be completely out of reach.

Film and Episodic Television

This is the one everyone knows, and for good reason. For film and TV, virtual production means directors can shoot fantastical, alien worlds without ever leaving the studio. Actors can be placed on distant planets, inside historical reconstructions, or within impossible buildings, all captured in-camera with perfect, dynamic lighting and reflections. This method completely de-risks complex visual effects shots. Instead of waiting months for a green screen composite and just hoping it will look right in post-production, the entire crew gets instant feedback on set. This helps actors deliver stronger performances because they're reacting to a real environment, and it frees up the camera to move through the digital space just as it would in a physical location.

By providing a tangible, visible world on set, virtual production empowers actors to deliver more grounded performances and gives directors the confidence that their creative vision is being captured perfectly, frame by frame.

Advertising and Commercials

In the breakneck world of advertising, time and precision are everything. Virtual production offers brands total control over their environment, which is a game-changer when shooting commercials. Need to film a car driving during a perfect sunset? With a virtual set, that "golden hour" light can last all day long. This level of control applies to every single visual detail, guaranteeing flawless product placement and brand consistency. It also makes creating assets for a whole campaign much simpler. A single digital set can be quickly tweaked for different markets, seasons, or product colours, all without the cost and logistical nightmare of booking multiple location shoots.

Immersive Experiences and XR

The real-time engines and tracking systems that power virtual production are the very same tools used to build interactive, immersive experiences. This crossover is leading to some genuinely exciting developments in Extended Reality (XR).

- VR Training Simulations: Sectors like healthcare and engineering are using these techniques to build ultra-realistic training modules. Trainees can practise complex procedures in a safe, controlled virtual space that looks and feels completely real.

- Location-Based Entertainment (LBE): From interactive museum exhibits to large-scale VR arcades, the tech is powering captivating experiences that blend physical sets with dynamic digital overlays. For instance, our work on the high-throughput mixed reality game Dance! Dance! Dance! shows just how these tools can engage huge crowds at live events.

- Artistic and Narrative VR: The same pipeline allows for the creation of beautiful, story-driven virtual reality shorts. Our award-winning project Aurora was crafted using these real-time techniques to immerse viewers in a compelling narrative world, from script to spatial sound.

Once you understand what mixed reality is and how it blends worlds, you can see the clear connection from on-set virtual production to fully interactive user experiences. It's a unified toolkit for a new era of digital content.

How to Get Started with Virtual Production in the UK

Dipping your toes into virtual production can feel like a massive leap of faith. But for businesses here in the UK, the path is getting clearer and the potential rewards are bigger than ever. The first question isn't about buying kit; it's a strategic one: do you build an in-house team from the ground up, or do you partner with a specialist studio? Going it alone is a huge commitment. It means a serious investment in talent with a very particular, and very rare, set of skills. We're talking about Unreal Engine artists, virtual production supervisors, and pipeline technical directors, experts who are in incredibly high demand. These are the people who can truly merge the creative soul of filmmaking with the hardcore technical demands of real-time 3D engines. For most businesses, finding an established studio to partner with is simply the most practical and sensible way forward. A specialist partner comes with a battle-tested pipeline, all the eye-wateringly expensive technology, and, most importantly, the experienced crew ready to hit the ground running. This lets you sidestep the steep learning curve and focus entirely on the creative vision for your project.

Budgeting for a New Workflow

It’s vital to get your head around the budget, because virtual production completely flips the traditional cost structure on its head. The bulk of your spending moves from post-production right to the front of the line, in pre-production. Instead of a long, often unpredictable VFX slog after the shoot, your investment is concentrated upfront in what's known as the virtual art department (VAD). This means all the detailed planning and digital asset creation has to be locked in before you even think about filming. It’s a different mindset, for sure, but the payoff is huge: far greater cost predictability and a massive reduction in the risk of needing expensive reshoots or post-production fixes later on.

Partnering with an experienced studio gives you instant access to a proven workflow. You get a team that knows how to manage this front-loaded process, making sure every penny of your budget is used effectively from day one.

The opportunity in the UK is exploding. The sector was worth USD 213.1 million in 2023 and is on track to hit an incredible USD 695.9 million by 2030. While software is a big part of that, the fastest-growing segment is services, which just goes to show the rising demand for expert studios to handle these complex projects. You can dive into the numbers and see the full picture of the UK's virtual production market growth. Ultimately, choosing the right partner is about finding a team with a deep history in both CGI and real-time production. A studio with decades of experience in animation and visual effects can be your guide, turning what looks like a complex technical mountain into a powerful creative tool.
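For a sense of what those two market figures imply year on year, here is a quick back-of-the-envelope sketch. It assumes smooth compound growth between 2023 and 2030, which is a simplification of our own rather than something the market report states; the function name is hypothetical.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in USD millions.
cagr = compound_annual_growth_rate(213.1, 695.9, 2030 - 2023)
print(f"Implied growth rate: {cagr:.1%} per year")
```

Under that assumption, the sector would need to grow by a little over 18% every year to get from the 2023 figure to the 2030 one.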

Your Virtual Production Questions Answered

As virtual production becomes a bigger part of the conversation, it’s natural to have questions. It’s a complex field, and there's a lot of noise out there. So, let’s cut through it and tackle some of the most common things we get asked. Think of this as your straightforward FAQ to clear up any confusion and get you the practical details you actually need.

Isn't Virtual Production Just for Massive Hollywood Films?

Not anymore. While it’s true that blockbusters put this technology on the map, it’s rapidly becoming a practical tool for television, high-end commercials, and even ambitious corporate projects. The game-changer is that you don't have to build a multi-million-pound stage from scratch. By partnering with a specialist studio that already has the pipeline dialled in and an experienced crew on hand, virtual production becomes a genuinely viable, and often surprisingly cost-effective, option for a much wider range of projects and budgets.

How Is This Really Different from a Green Screen?

The difference is night and day. It all comes down to real-time feedback and getting the final shot in-camera. A green screen is a placeholder. You shoot your scene, then spend weeks or months in post-production keying out the green and painstakingly compositing in a digital background.

With an LED volume, the background isn’t just a backdrop; it’s an active part of the scene. It casts realistic, dynamic light and reflections on your actors, sets, and props. Performers have a tangible world to react to, and the final image is captured right there on set, dramatically cutting down your post-production workload.

What Skills Does a Virtual Production Team Actually Need?

A great virtual production crew is a special kind of hybrid. It’s a blend of traditional filmmaking craft with the deep, technical know-how of real-time 3D engines. Having the tech is one thing; knowing how to use it with an artist’s eye is another entirely. You’re looking for a team with:

- Real-Time Artists who live and breathe game engines like Unreal Engine or Unity.

- Pipeline Technical Directors, the people who wrangle the complex flow of data and make sure all the different systems talk to each other seamlessly.

- A Traditional Film Crew (cinematographers, gaffers, grips) who are open-minded and ready to adapt their craft to a new, digitally-driven workflow on set.

At Studio Liddell, we bring decades of experience in CGI, real-time engines, and broadcast production to the table. If you're ready to see how virtual production can bring your vision to life, we're ready to talk.